Week 10 (August 4 - 18): It’s coming together

Inspired by the Greats

This week I’m returning to my AI functionality with renewed energy and a new idea to implement: an AI chat where the user can bring any interior design vision to life.

To do this, I need to create a new screen for the ‘AI Chat’ button at the bottom of my home screen. I’m taking design inspiration from popular AI chatbots like ChatGPT and Claude, which show example prompts on the chat screen that the user can click if they aren’t sure what to type in their very first chat.

ChatGPT and Claude both provide example prompts to start you off on your first messages

I’m designing my AI Chat screen in a similar way, with example prompts at the top of the screen and a textbox the user can type in at the bottom. I’m including three example prompts, each producing a style distinct from the others. The three example prompts I wrote are:

A cozy Scandinavian living room with light wood furniture, soft pastel accents, and a large bay window overlooking a snowy landscape.

An industrial loft bedroom with exposed brick walls, concrete floors, and large black-framed windows, featuring a mix of vintage leather furniture and sleek metal decor.

A futuristic home office with sleek, ergonomic furniture, floor-to-ceiling glass windows, minimalist white shelves, and a statement light fixture with LED accents.

With the first prompt I intended a ‘Scandinavian’ decor style, with the second an ‘Industrial’ style, and with the third a more futuristic feel.

These buttons don’t work yet because I first have to implement the textbox and actually send messages to the Stable Diffusion API, which is what I’m figuring out next.

Back to Stable Diffusion

To let users send messages to Stable Diffusion to generate images, I’m coding the textbox so the user can type in their input. Then I send that input to Stable Diffusion as a prompt through its REST API. To do this, I’m creating an account with Stability AI (the company behind Stable Diffusion) and then using my Stability API key to connect my account to my app. This way, whenever a user sends a message, it uses the credits from my account to generate images. Stable Diffusion has great documentation that walked me through each step of this process. I had actually done all of this already in Week 8, when I was originally using Stable Diffusion to generate floorplans.

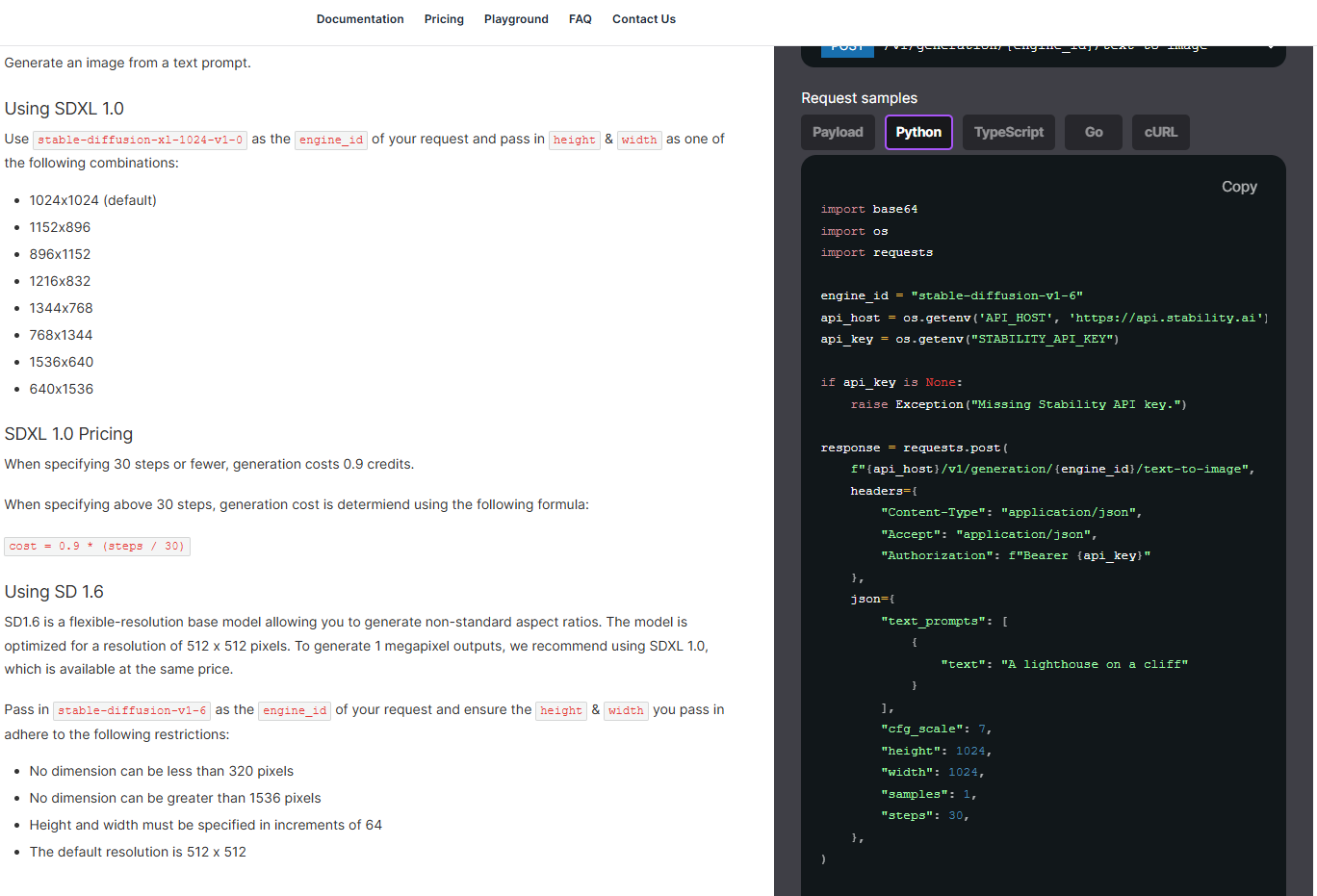

Stable Diffusion’s docs for new users learning how to use their text-to-image models

My account page, where I found the API key I had first created in Week 8
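To make the REST call a bit more concrete, here is a minimal Python sketch that builds (but does not send) the text-to-image request. It assumes Stability’s v1 SDXL 1.0 endpoint (`stable-diffusion-xl-1024-v1-0`), a Bearer-token `Authorization` header, and a `text_prompts` list in the JSON body, which is how I understand the v1 API; the current docs are the authority. The function name `build_sdxl_request` and the `STABILITY_API_KEY` environment variable are hypothetical, and Python is used here only for illustration.

```python
import json
import os
import urllib.request

# Stability AI v1 REST endpoint for SDXL 1.0 text-to-image, as I understand the docs;
# engine IDs and endpoints can change, so check the current API reference.
SDXL_URL = (
    "https://api.stability.ai/v1/generation/"
    "stable-diffusion-xl-1024-v1-0/text-to-image"
)


def build_sdxl_request(user_text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that uses the textbox input as the prompt."""
    body = {
        "text_prompts": [{"text": user_text}],  # the user's message becomes the prompt
        "samples": 1,   # one image per message
        "width": 1024,  # SDXL 1.0 only accepts certain sizes, 1024x1024 among them
        "height": 1024,
    }
    return urllib.request.Request(
        SDXL_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # key from the Stability account page
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical environment variable; keeps the key out of the source code.
    key = os.environ.get("STABILITY_API_KEY", "missing-key")
    req = build_sdxl_request("A cozy Scandinavian living room", key)
    print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen` would actually send it and spend credits, which is why the sketch stops at building it.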

From investigating their API docs, I found the image generation model ‘SDXL 1.0’ was the best fit for me because of its price. Each image costs around 0.9 credits, and according to their site, 1 credit = 1 cent. So each image a user generates will cost me around 0.9 cents, which is very cheap.

API docs for the SDXL 1.0 model, where I found the cost per image as well as an outline I could copy to call the model

I found the cost per credit on Stable Diffusion’s ‘Pricing Page’: 1 credit = $0.01
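The pricing math is simple enough to check in a few lines. This Python sketch only multiplies the figures quoted above (about 0.9 credits per image, $0.01 per credit); the helper name `image_cost_usd` is made up for illustration.

```python
# Figures quoted above: about 0.9 credits per SDXL 1.0 image, and 1 credit = $0.01.
CREDITS_PER_IMAGE = 0.9
USD_PER_CREDIT = 0.01


def image_cost_usd(num_images: int) -> float:
    """Estimated Stability bill in US dollars for a number of generated images."""
    return num_images * CREDITS_PER_IMAGE * USD_PER_CREDIT


if __name__ == "__main__":
    for n in (1, 100, 1000):
        print(f"{n:>5} image(s) ≈ ${image_cost_usd(n):.3f}")
```

At these rates, 1,000 generated images would cost about $9.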

Now that I also had an example outline for calling the AI model, I could essentially reuse the same request, except that I’ll put the user’s text in the ‘text_prompt’ variable instead.
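Reusing the docs’ outline mostly means copying the example body and swapping in the user’s text. Here is a minimal Python sketch, assuming the body has the shape of Stability’s v1 text-to-image example, where the prompt sits at `text_prompts[0].text` (my guess at what the ‘text_prompt’ variable corresponds to). `SDXL_TEMPLATE` and `fill_template` are hypothetical names, and the template’s values are placeholders.

```python
import copy

# Hypothetical stand-in for the example body copied from the SDXL 1.0 docs;
# the real outline's fields and values may differ.
SDXL_TEMPLATE = {
    "text_prompts": [{"text": "A lighthouse on a cliff"}],
    "samples": 1,
    "width": 1024,
    "height": 1024,
}


def fill_template(user_text: str, template: dict = SDXL_TEMPLATE) -> dict:
    """Return a copy of the example body with the user's text as the prompt."""
    cleaned = user_text.strip()
    if not cleaned:
        raise ValueError("Prompt is empty")
    body = copy.deepcopy(template)  # never mutate the shared template between messages
    body["text_prompts"][0]["text"] = cleaned
    return body
```

Copying with `copy.deepcopy` matters because the prompt lives inside a nested list: a shallow copy would share that list, so one user’s text would overwrite the template for the next message.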

Precautions

However, around this time I had a thought: what if the user decided to send my AI a message that wasn’t related to interior design? How would I guard against misuse of the AI Chat, given that Stable Diffusion can generate images of all kinds? Theoretically, a user could ask it to generate an image of a banana and it would do so.

To solve this potential issue, I’m deciding to first send the user’s message to a separate language model, along with a prompt telling it to say ‘yes’ if the user’s prompt asks about interior design and ‘no’ if it doesn’t. This way, I basically turn the language model into an assistant that checks for me whether the user’s prompt should be sent to Stable Diffusion. If the language model outputs ‘yes’, I send the user’s prompt; if it outputs ‘no’, I show the user an error message instead.
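The yes/no guardrail boils down to a small routing function. In this Python sketch the two API calls are passed in as plain functions (`ask_checker` and `generate_image` are hypothetical stand-ins for the GPT and Stable Diffusion requests), which also makes the logic easy to test with fakes. Treating anything other than a clear ‘yes’ as ‘no’ is my own assumption, chosen so an unexpected reply fails safe; the error message wording is made up too.

```python
from typing import Callable


def is_interior_design(reply: str) -> bool:
    """Interpret the checker's reply; anything other than a clear 'yes' counts as 'no'."""
    return reply.strip().strip(".!").lower() == "yes"


def handle_message(
    user_text: str,
    ask_checker: Callable[[str], str],
    generate_image: Callable[[str], str],
) -> str:
    """Forward the prompt to Stable Diffusion only if the checker model says 'yes'."""
    if is_interior_design(ask_checker(user_text)):
        return generate_image(user_text)
    # Hypothetical error text shown in the chat instead of an image.
    return "Sorry, I can only help with interior design ideas."
```

Because the checker’s answer is parsed strictly, a reply like “Yes, but…” is rejected rather than waved through.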

To do this, I decided to use one of OpenAI’s GPT models as this assistant.

By following OpenAI’s ‘Developer Quickstart’, I learned how to make my first API request to a GPT model: first I accessed my API key, and then I followed OpenAI’s template for making an API request.

OpenAI’s Developer Quickstart page, where I found out how to find my API key in my profile

This is the template I used to make a call to GPT-4o-mini
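Here is a sketch of what the GPT-4o-mini call could look like for this checker, assuming OpenAI’s Chat Completions endpoint (`/v1/chat/completions`) with a system message holding the instructions and a user message holding the prompt. The instruction wording, `build_checker_request`, and `read_reply` are hypothetical, and the request is only built, not sent.

```python
import json
import urllib.request

OPENAI_URL = "https://api.openai.com/v1/chat/completions"

# Hypothetical instruction text for the prompt-checking assistant.
CHECKER_INSTRUCTIONS = (
    "You check prompts for an interior design app. Reply with only 'yes' if the "
    "prompt asks for an interior design image, otherwise reply with only 'no'."
)


def build_checker_request(user_text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request asking GPT-4o-mini yes/no."""
    body = {
        "model": "gpt-4o-mini",
        "messages": [
            {"role": "system", "content": CHECKER_INSTRUCTIONS},
            {"role": "user", "content": user_text},
        ],
        "temperature": 0,  # favour the same answer for the same prompt
        "max_tokens": 3,   # a one-word answer needs very few tokens
    }
    return urllib.request.Request(
        OPENAI_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def read_reply(response_json: dict) -> str:
    """Extract the model's text from a parsed Chat Completions response."""
    return response_json["choices"][0]["message"]["content"]
```

Setting `temperature` to 0 and capping the output length keeps replies short and consistent, which makes the yes/no answer easier to parse.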

I’m deciding to use GPT-4o-mini because it was the cheapest model while still being strong enough for my needs. GPT-4o-mini costs around $0.150 per 1M input tokens and $0.600 per 1M output tokens. One token is roughly 4 characters of English text, so it’s safe to say this model is very cheap for a simple assistant like the one I need.

Pricing for GPT-4o-mini
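To see why the cost is negligible, here is a rough per-check estimate in Python using the quoted prices and the 4-characters-per-token rule of thumb. The 400-character request size is a made-up example, and real token counts depend on the tokenizer plus a few tokens of per-message overhead, so treat the result as an order of magnitude.

```python
INPUT_USD_PER_MILLION = 0.150   # GPT-4o-mini input price quoted above
OUTPUT_USD_PER_MILLION = 0.600  # GPT-4o-mini output price quoted above
CHARS_PER_TOKEN = 4             # rough rule of thumb for English text


def check_cost_usd(request_chars: int, output_tokens: int = 1) -> float:
    """Estimate the cost of one yes/no check from the request length in characters."""
    input_tokens = request_chars / CHARS_PER_TOKEN
    return (
        input_tokens * INPUT_USD_PER_MILLION
        + output_tokens * OUTPUT_USD_PER_MILLION
    ) / 1_000_000


if __name__ == "__main__":
    cost = check_cost_usd(400)  # hypothetical: instructions plus user prompt
    print(f"One check ≈ ${cost:.7f}; checks per dollar ≈ {1 / cost:,.0f}")
```

For a 400-character request and a one-token answer, that is about 100 input tokens, or roughly $0.0000156 per check, so a single dollar covers around 64,000 checks.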

Over the past two weeks, by combining GPT-4o-mini as a prompt-checking assistant with Stable Diffusion’s text-to-image generation, I was able to build an AI Chat screen with a built-in guardrail against prompts unrelated to interior design.

I also connected my app to Google AdMob, a service that lets you show ads in your mobile apps. I coded it so that every time the user sends a message, they’re prompted to watch an ad. If they watch the ad, their prompt is sent through to Stable Diffusion. Here it is in action!
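The ad gate itself is a simple check before sending. This Python sketch is platform-neutral: `show_rewarded_ad` is a hypothetical stand-in for the AdMob code (presumably its rewarded ad format) and is assumed to report whether the user finished watching, whereas the real Google Mobile Ads SDK works through callbacks. What happens when the ad is skipped or fails to load is also my assumption.

```python
from typing import Callable, Optional


def send_with_ad(
    user_text: str,
    show_rewarded_ad: Callable[[], bool],
    send_prompt: Callable[[str], str],
) -> Optional[str]:
    """Forward the prompt only if the (hypothetical) ad helper reports a finished ad."""
    if not show_rewarded_ad():
        # Assumed behaviour: nothing is sent, so no credits are spent.
        return None
    return send_prompt(user_text)
```

Returning `None` lets the chat screen decide how to react, for example by keeping the message in the textbox so the user can try again.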

Next Steps

Next week, I plan to add a few more features to my app, including the floorplan design screen as well as basic notification and profile screens! I also want to research how to publish my app on the Apple App Store and Google Play Store, since I’m almost done coding all the features for my application.